I have been running my own mail server for a while now. From the beginning I was thinking about how to store the mails encrypted, so that no one with access to the server can read them. The solution I came up with is relatively easy to set up and is based on OpenPGP/GnuPG.

The basic idea is to take incoming mail before it is stored and encrypt it. I'm running postfix, which can pass queued mail through external content filters. A content filter receives a mail via stdin, does whatever it needs to do, and either rejects the mail or puts it back into the mail queue.
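The filter contract boils down to three steps: read the mail from stdin, transform it, and hand the result back to postfix through `sendmail`. The exit status uses sysexits codes so postfix knows whether to defer. Below is a minimal Python sketch of that contract. `run_filter` and `passthrough` are hypothetical names, not part of gpgmail, and the sketch assumes that every argument after the script name is meant for `sendmail`. The `-G -i` flags follow the simple content filter example in postfix's FILTER_README.

```python
import subprocess
import sys
from typing import Callable, Sequence

# sysexits(3) code that makes the postfix pipe(8) agent defer the mail and retry later
EX_TEMPFAIL = 75

# Re-injection command as in the simple content filter example of postfix's FILTER_README
SENDMAIL = ("/usr/sbin/sendmail", "-G", "-i")


def run_filter(raw: bytes, transform: Callable[[bytes], bytes],
               sendmail_args: Sequence[str],
               sendmail_cmd: Sequence[str] = SENDMAIL) -> int:
    """Transform one mail and hand it back to postfix; return a sysexits code."""
    try:
        filtered = transform(raw)
    except Exception as exc:  # never drop mail on a filter bug: defer it instead
        print(f"filter failed: {exc}", file=sys.stderr)
        return EX_TEMPFAIL
    try:
        result = subprocess.run([*sendmail_cmd, *sendmail_args], input=filtered)
    except OSError as exc:  # e.g. the sendmail binary is missing
        print(f"cannot run sendmail: {exc}", file=sys.stderr)
        return EX_TEMPFAIL
    return result.returncode  # pass sendmail's exit status through to postfix


def passthrough(mail: bytes) -> bytes:
    """Placeholder transform (hypothetical); the encryption step would go here."""
    return mail


if __name__ == "__main__":
    # assumption: postfix appends the sendmail options, e.g. "-f sender recipient"
    sys.exit(run_filter(sys.stdin.buffer.read(), passthrough, sys.argv[1:]))
```

The author's setup splits this into a Python filter and a separate Bash re-sender. The sketch merges both into one function only to keep the control flow visible in one place.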

I wrote a relatively simple Python script that takes a mail from stdin, processes it and writes it back to stdout. The script can decrypt, encrypt, sign, or sign and encrypt a mail. It also tries to protect the mail headers following the Memory Hole specification and supports Thunderbird/Enigmail's encrypted subject feature. The drawback is that Enigmail only supports the encrypted header part of the Memory Hole specification, and other mail clients don't support it at all. For the content_filter in postfix I wrote a Bash script that resends the encrypted mail to put it back into the mail queue. The scripts can be found on GitHub.
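An encrypted mail that Thunderbird/Enigmail can open is a PGP/MIME message as defined in RFC 3156. It is a `multipart/encrypted` container with an `application/pgp-encrypted` control part and the armored ciphertext. The sketch below builds that structure with Python's standard `email` package. It is not the gpgmail implementation. It assumes a caller-supplied `encrypt` function that returns ASCII-armored data. For the encrypted subject it follows the spirit of Memory Hole rather than its exact wire format: the real Subject travels inside the encrypted part, and the outer Subject is replaced by an assumed placeholder `"..."`.

```python
from email import message_from_bytes
from email.message import Message
from email.mime.base import MIMEBase
from email.mime.multipart import MIMEMultipart
from typing import Callable

# Assumption: the outer Subject that clients without the key will see
PLACEHOLDER_SUBJECT = "..."


def pgp_mime_encrypt(raw: bytes, encrypt: Callable[[bytes], bytes],
                     encrypt_subject: bool = True) -> Message:
    """Wrap a mail into an RFC 3156 multipart/encrypted message.

    `encrypt` must return ASCII-armored OpenPGP data for the given bytes.
    """
    original = message_from_bytes(raw)

    # Inner entity: keep only the content headers (plus the real Subject,
    # if it should be protected); routing headers stay outside.
    inner = message_from_bytes(raw)
    for name in set(inner.keys()):
        lname = name.lower()
        if not (lname.startswith("content-")
                or (encrypt_subject and lname == "subject")):
            del inner[name]
    ciphertext = encrypt(inner.as_bytes()).decode("ascii")

    # RFC 3156 layout: a version control part followed by the encrypted data.
    control = MIMEBase("application", "pgp-encrypted")
    control.set_payload("Version: 1\n")
    data = MIMEBase("application", "octet-stream", name="encrypted.asc")
    data.set_payload(ciphertext)
    outer = MIMEMultipart("encrypted", protocol="application/pgp-encrypted")
    outer.attach(control)
    outer.attach(data)

    # Copy From, To, Date, ... to the outer message, but not the old MIME headers.
    for name, value in original.items():
        lname = name.lower()
        if lname.startswith("content-") or lname == "mime-version":
            continue
        if lname == "subject" and encrypt_subject:
            value = PLACEHOLDER_SUBJECT
        outer[name] = value
    return outer
```

In a real filter, `encrypt` could run gpg through `subprocess`, for example `gpg --homedir /home/gpgmail/.gnupg --batch --armor --encrypt --recipient <KEY>`, with `--sign` added for the sign-and-encrypt mode.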

Setup

- Install gpgmail

- Add a new user:

adduser --shell /bin/false --home /home/gpgmail --disabled-password --disabled-login --gecos "" gpgmail

- Create the .gnupg folder and change its permissions:

mkdir /home/gpgmail/.gnupg

chown gpgmail:gpgmail /home/gpgmail/.gnupg/

chmod 700 /home/gpgmail/.gnupg/

- If mails should not only be encrypted but also signed, create a new key pair:

sudo -u gpgmail /usr/bin/gpg --homedir=/home/gpgmail/.gnupg --expert --full-gen-key

- Import the public keys and change their trust (gpg writes the new owner trust to the trust database immediately, so `quit` is enough and no `save` is needed):

sudo -u gpgmail /usr/bin/gpg --homedir=/home/gpgmail/.gnupg --import /home/gpgmail/pubkey.asc

sudo -u gpgmail /usr/bin/gpg --homedir=/home/gpgmail/.gnupg --edit-key <KEY> trust quit

- Edit `/etc/postfix/master.cf`:

```
smtp       inet  n  -  y  -  -  smtpd -o content_filter=gpgmail-pipe
smtps      inet  n  -  y  -  -  smtpd -o content_filter=gpgmail-pipe
submission inet  n  -  y  -  -  smtpd -o content_filter=gpgmail-pipe
gpgmail-pipe unix -  n  n  -  -  pipe
  flags=Rq user=gpgmail argv=/usr/bin/gpgmail-postfix sign-encrypt gnupghome=/home/gpgmail/.gnupg key=<KEY_ID> passphrase=<PASSPHRASE> encrypt-subject -oi -f ${sender} ${recipient}
```

The `flags=` line continues the `gpgmail-pipe` entry, so it has to start with whitespace; otherwise postfix reads it as a new service.

- Restart postfix.
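The interactive `trust` dialog from the key import step can also be scripted with gpg's `--import-ownertrust` option. That option reads lines of the form `FINGERPRINT:LEVEL:`, the same format `--export-ownertrust` writes. Below is a minimal sketch, assuming a v4 key (40 hex digits in the full fingerprint; the short key ID is not accepted) and that it runs as the gpgmail user. `ownertrust_line` and `set_ownertrust` are hypothetical helper names.

```python
import re
import subprocess

# Numeric owner trust values as written by `gpg --export-ownertrust`
OWNERTRUST = {"never": 3, "marginal": 4, "full": 5, "ultimate": 6}


def ownertrust_line(fingerprint: str, level: str) -> str:
    """Build one 'FINGERPRINT:LEVEL:' line for `gpg --import-ownertrust`."""
    fpr = fingerprint.replace(" ", "").upper()
    if not re.fullmatch(r"[0-9A-F]{40}", fpr):  # assumption: v4 fingerprint
        raise ValueError(f"expected a 40-digit hex fingerprint, got {fingerprint!r}")
    return f"{fpr}:{OWNERTRUST[level]}:\n"


def set_ownertrust(fingerprint: str, level: str = "full",
                   homedir: str = "/home/gpgmail/.gnupg") -> None:
    """Set the owner trust non-interactively (run this as the gpgmail user)."""
    subprocess.run(["/usr/bin/gpg", f"--homedir={homedir}", "--import-ownertrust"],
                   input=ownertrust_line(fingerprint, level).encode("ascii"),
                   check=True)
```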

Sources

I must sadly announce the end of life for TIMA, or at least for the TIMA website at https://tima.jnphilipp.org. This is due to the practically non-existent traffic and my inability to maintain the site. The EOL will be at the end of the month, on the 30th of September 2016. After the shutdown I will upload a database dump with the associations to this post.

Update: The EOL of the TIMA website has been reached. As promised, a dump of the associations can be downloaded here as a JSON file. For each word, the language, count, identifier and associations are given; here the count indicates how often the word was answered. An association has the same information, but its count indicates how often the association was given to the word.
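The dump format described above suggests a simple way to query it. Below is a minimal Python sketch. It assumes the file is a JSON list of word objects whose keys are literally `identifier`, `language`, `count` and `associations`, and that each association object carries the same first three keys. These key names are guesses, so adjust them to the actual file. `load_dump` and `top_associations` are hypothetical helpers.

```python
import json
from typing import Any, Dict, List, Tuple

Entry = Dict[str, Any]


def load_dump(path: str) -> List[Entry]:
    """Read the JSON dump (assumed: a list of word objects)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def top_associations(words: List[Entry], identifier: str, language: str,
                     n: int = 5) -> List[Tuple[str, int]]:
    """Return the n associations given most often to one word."""
    for word in words:
        if word["identifier"] == identifier and word["language"] == language:
            ranked = sorted(word["associations"], key=lambda a: a["count"], reverse=True)
            return [(a["identifier"], a["count"]) for a in ranked[:n]]
    return []
```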

Since my last post about TIMA a few things have happened and changed. We added an FAQ page and, most noticeably, a section with games to the website. Currently there is only one: AssociationChain.

AssociationChain is a simple game in which you and TIMA build an association chain together. The rules are as follows: You and TIMA alternately associate a word to the previous association. The goal is to build long chains.
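The post does not say how TIMA picks its move. The rules can still be made concrete with a purely hypothetical rule: TIMA answers with the most frequent known association of the previous word that is not already in the chain. Below is a minimal Python sketch of that assumption. `tima_move` and `play` are illustrative names, not TIMA's code.

```python
from typing import Dict, List, Optional

Associations = Dict[str, Dict[str, int]]  # word -> {association: count}


def tima_move(chain: List[str], associations: Associations) -> Optional[str]:
    """Hypothetical rule: answer with the most frequent association not yet used."""
    candidates = associations.get(chain[-1], {})
    unused = {word: count for word, count in candidates.items() if word not in chain}
    if not unused:
        return None  # no fresh association known: the chain ends here
    return max(unused, key=unused.get)


def play(start: str, player_words: List[str], associations: Associations) -> List[str]:
    """Alternate player and TIMA moves, starting from `start`."""
    chain = [start]
    for word in player_words:
        chain.append(word)  # the player's association to the previous word
        reply = tima_move(chain, associations)
        if reply is None:
            break
        chain.append(reply)
    return chain
```

For example, with `{"sea": {"water": 7, "blue": 3}, "rain": {"water": 5, "cloud": 3}}`, the player words `["sea", "rain"]` starting from `"beach"` build the chain `beach, sea, water, rain, cloud`. "water" cannot be reused, so TIMA falls back to "cloud".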

As for the apps, they are a work in progress. The basic functionality of the website is in the app and works; we'll see that the rest gets into it and that we can distribute it. The game apps will take some more time.

As for TIMA itself, we currently support four languages: German, English, Spanish and Farsi. We have a total of over 3400 words and over 4000 unique associations.

I'm sorry I'm a little late with this, but I finally got around to writing this post. Last term I took a course where we had to write a simulated web crawler and implement different crawling strategies. The complete code and detailed descriptions of the inputs and how to compile and run it are on GitHub.

First we had to implement a breadth-first search strategy, then two page-level strategies (backlink count and OPIC) and two site-level strategies (round robin and max page priority), which could be combined as desired. Finally, we had to develop a formula combining OPIC, backlink count and the ratio of good to bad pages into a strategy called OPTIMAL.
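The two page-level strategies differ only in how they score uncrawled URLs. Backlink count scores a URL by how many crawled pages link to it. OPIC (Online Page Importance Computation) gives every page "cash" that a crawled page hands on to its outlinks in equal shares. Below is a minimal Python sketch of both on a toy in-memory graph, with a greedy crawl that always fetches the best-scored known URL. It is not the course code, which is in Java. It also simplifies OPIC: pages without outlinks keep their cash in the history instead of passing it to a virtual page.

```python
from typing import Dict, List, Protocol


class Strategy(Protocol):
    def priority(self, url: str) -> float: ...
    def on_crawl(self, page: str, outlinks: List[str]) -> None: ...


class BacklinkCount:
    """Priority = number of crawled pages seen linking to the URL."""

    def __init__(self) -> None:
        self.backlinks: Dict[str, int] = {}

    def priority(self, url: str) -> float:
        return self.backlinks.get(url, 0)

    def on_crawl(self, page: str, outlinks: List[str]) -> None:
        for target in outlinks:
            self.backlinks[target] = self.backlinks.get(target, 0) + 1


class Opic:
    """OPIC: a crawled page hands its cash on to its outlinks in equal shares."""

    def __init__(self, seeds: List[str]) -> None:
        self.cash: Dict[str, float] = {s: 1.0 / len(seeds) for s in seeds}
        self.history: Dict[str, float] = {}

    def priority(self, url: str) -> float:
        return self.cash.get(url, 0.0)

    def on_crawl(self, page: str, outlinks: List[str]) -> None:
        amount = self.cash.pop(page, 0.0)
        self.history[page] = self.history.get(page, 0.0) + amount
        for target in outlinks:  # pages without outlinks keep the cash in history only
            self.cash[target] = self.cash.get(target, 0.0) + amount / len(outlinks)


def crawl(graph: Dict[str, List[str]], seeds: List[str],
          strategy: Strategy, steps: int) -> List[str]:
    """Greedy crawl: always fetch the known URL with the highest priority."""
    frontier = set(seeds)
    seen = set(seeds)
    order: List[str] = []
    for _ in range(steps):
        if not frontier:
            break
        # highest priority first, ties broken alphabetically for determinism
        page = min(frontier, key=lambda u: (-strategy.priority(u), u))
        frontier.remove(page)
        order.append(page)
        outlinks = graph.get(page, [])
        strategy.on_crawl(page, outlinks)
        for url in outlinks:
            if url not in seen:
                seen.add(url)
                frontier.add(url)
    return order
```

When every page has outlinks, the total OPIC cash stays at 1. Only the history grows, and that history is what OPIC uses as the importance estimate.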

On the first run two input files need to be provided: the first one is the link graph and the second one the quality mapping. Before the actual crawling starts, the files are read and stored in a MapDB for easy access. As long as the MapDB files exist, there is no need to provide the link graph and quality mapping files; if they are provided, the MapDB is recreated.
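The "build once, then reuse" behaviour can be sketched in Python with the standard `shelve` module, swapped in for MapDB (a Java library). The input formats here are assumptions, since the post doesn't specify them: one whitespace-separated `source target` pair per line for the link graph, and `url quality` with an integer quality per line for the mapping. The sketch groups links in memory before writing. At the course's scale of 230 million entries, a real implementation would have to stream instead.

```python
import dbm
import shelve
from collections import defaultdict
from typing import Dict, Optional, Set


def build_store(db_path: str, link_graph: str, quality: str) -> None:
    """(Re)create the store from the two input files; duplicate links are dropped."""
    links: Dict[str, Set[str]] = defaultdict(set)
    with open(link_graph, encoding="utf-8") as f:
        for line in f:
            if line.strip():
                source, target = line.split()
                links[source].add(target)
    with shelve.open(db_path, flag="n") as db:  # "n": always start from an empty store
        for source, targets in links.items():
            db["links:" + source] = sorted(targets)
        with open(quality, encoding="utf-8") as f:
            for line in f:
                if line.strip():
                    url, value = line.split()
                    db["quality:" + url] = int(value)


def open_store(db_path: str, link_graph: Optional[str] = None,
               quality: Optional[str] = None) -> shelve.Shelf:
    """Rebuild the store if input files are given, otherwise reuse the existing one."""
    if link_graph and quality:
        build_store(db_path, link_graph, quality)
    try:
        return shelve.open(db_path, flag="r")
    except dbm.error as exc:
        raise FileNotFoundError(
            f"no store at {db_path}: provide the link graph and quality files") from exc
```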

For performance reasons the crawling itself is done in threads via a ScheduledThreadPoolExecutor. A single thread performs the crawling of a single site.
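The "one thread per site" split can be sketched in Python with `concurrent.futures.ThreadPoolExecutor` standing in for Java's ScheduledThreadPoolExecutor. Each crawl step groups the frontier by host and submits one task per site. The `fetch` callable and the per-site budget are hypothetical stand-ins for the simulated download. In CPython, threads only pay off when `fetch` is I/O-bound, because pure-Python work is serialised by the GIL.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List
from urllib.parse import urlsplit


def group_by_site(urls: List[str]) -> Dict[str, List[str]]:
    """Bucket URLs by host name, i.e. by site."""
    sites: Dict[str, List[str]] = {}
    for url in urls:
        sites.setdefault(urlsplit(url).netloc, []).append(url)
    return sites


def crawl_site(urls: List[str], budget: int, fetch: Callable[[str], None]) -> List[str]:
    """Crawl up to `budget` URLs of one site; runs inside a single worker thread."""
    crawled = []
    for url in urls[:budget]:
        fetch(url)
        crawled.append(url)
    return crawled


def crawl_step(frontier: List[str], budget_per_site: int,
               fetch: Callable[[str], None], workers: int = 8) -> Dict[str, List[str]]:
    """One crawl step: every site is handed to its own task in the pool."""
    sites = group_by_site(frontier)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {site: pool.submit(crawl_site, urls, budget_per_site, fetch)
                   for site, urls in sites.items()}
        return {site: future.result() for site, future in futures.items()}
```

Keeping all URLs of one site in one task also means per-site bookkeeping, such as politeness delays, needs no locking.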

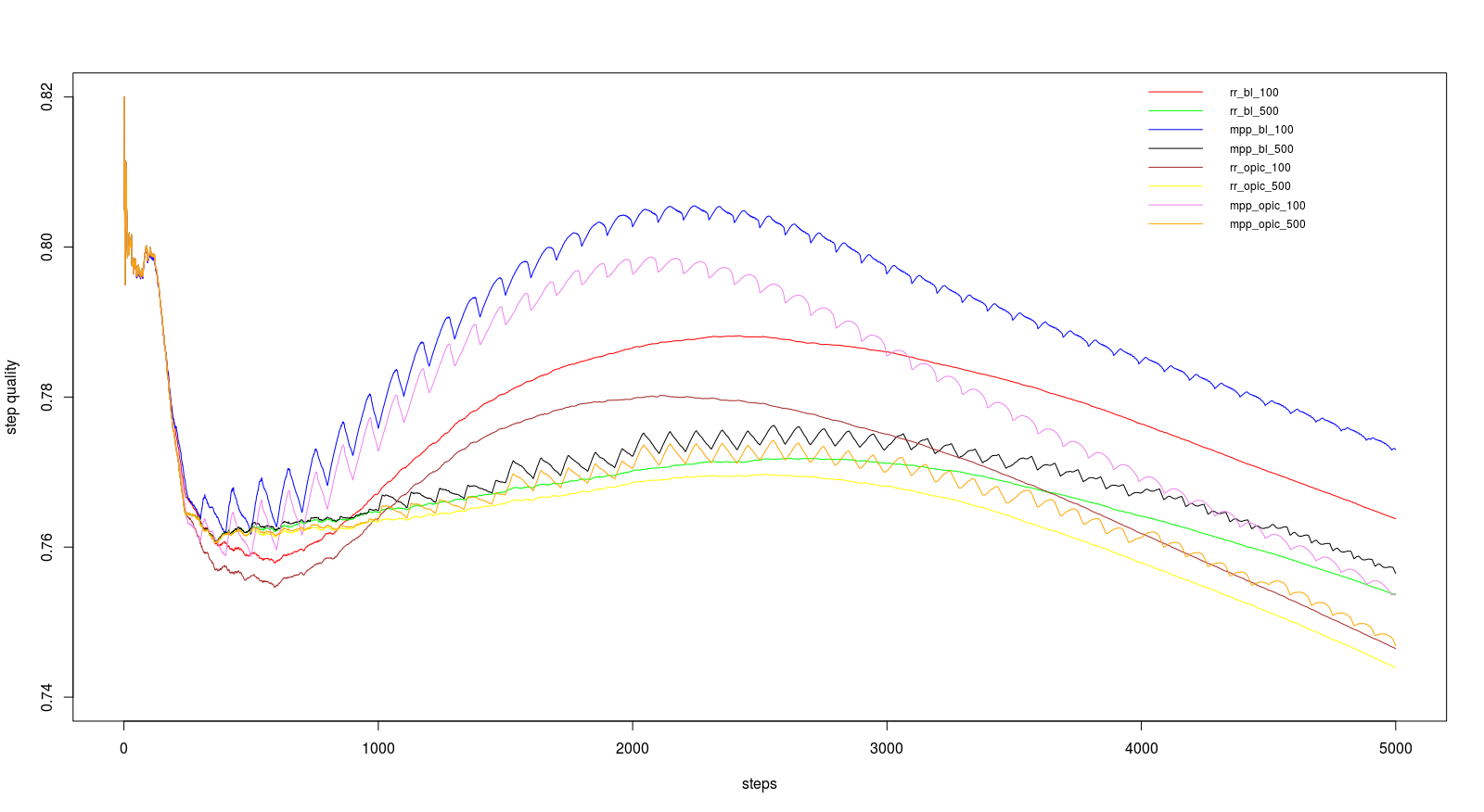

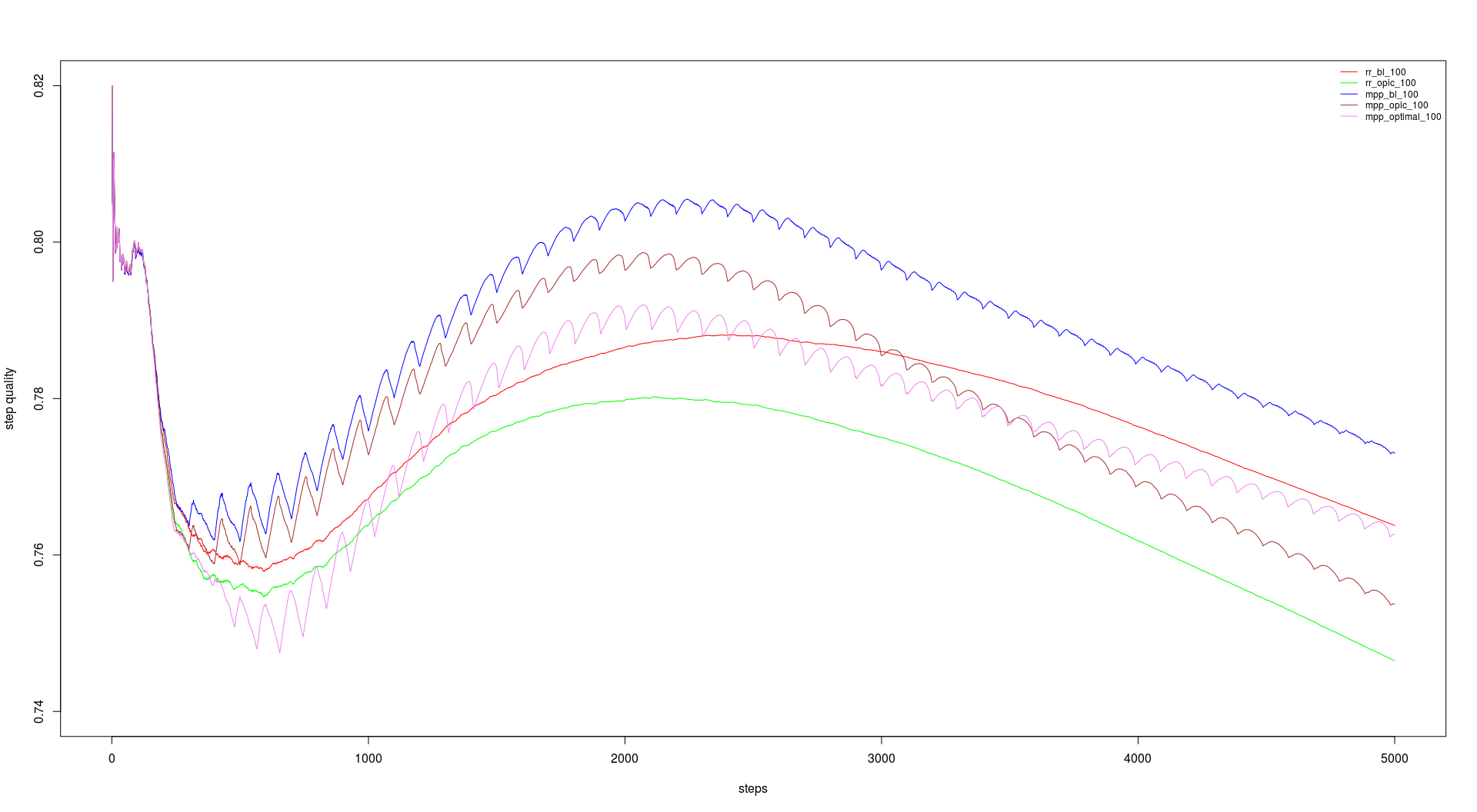

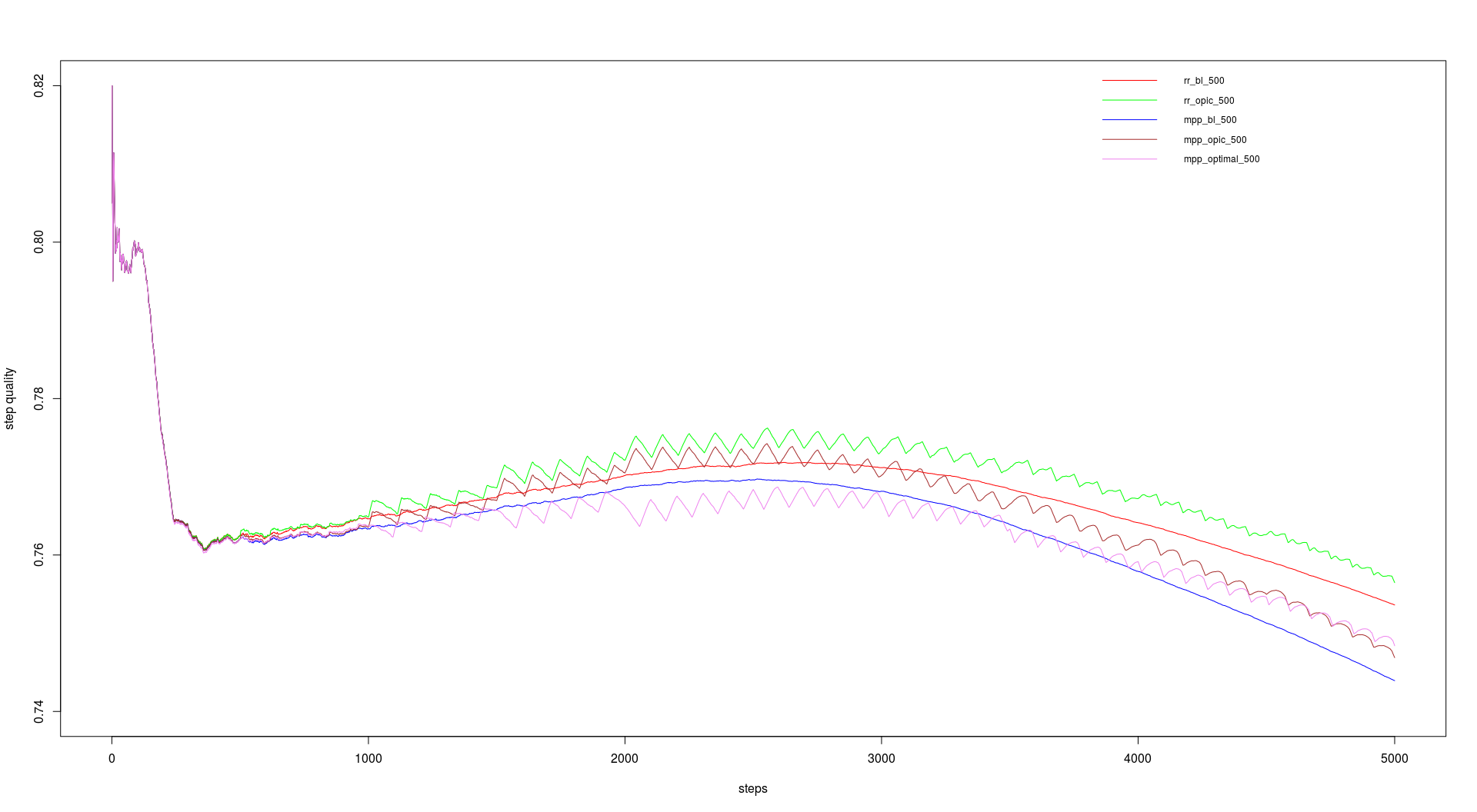

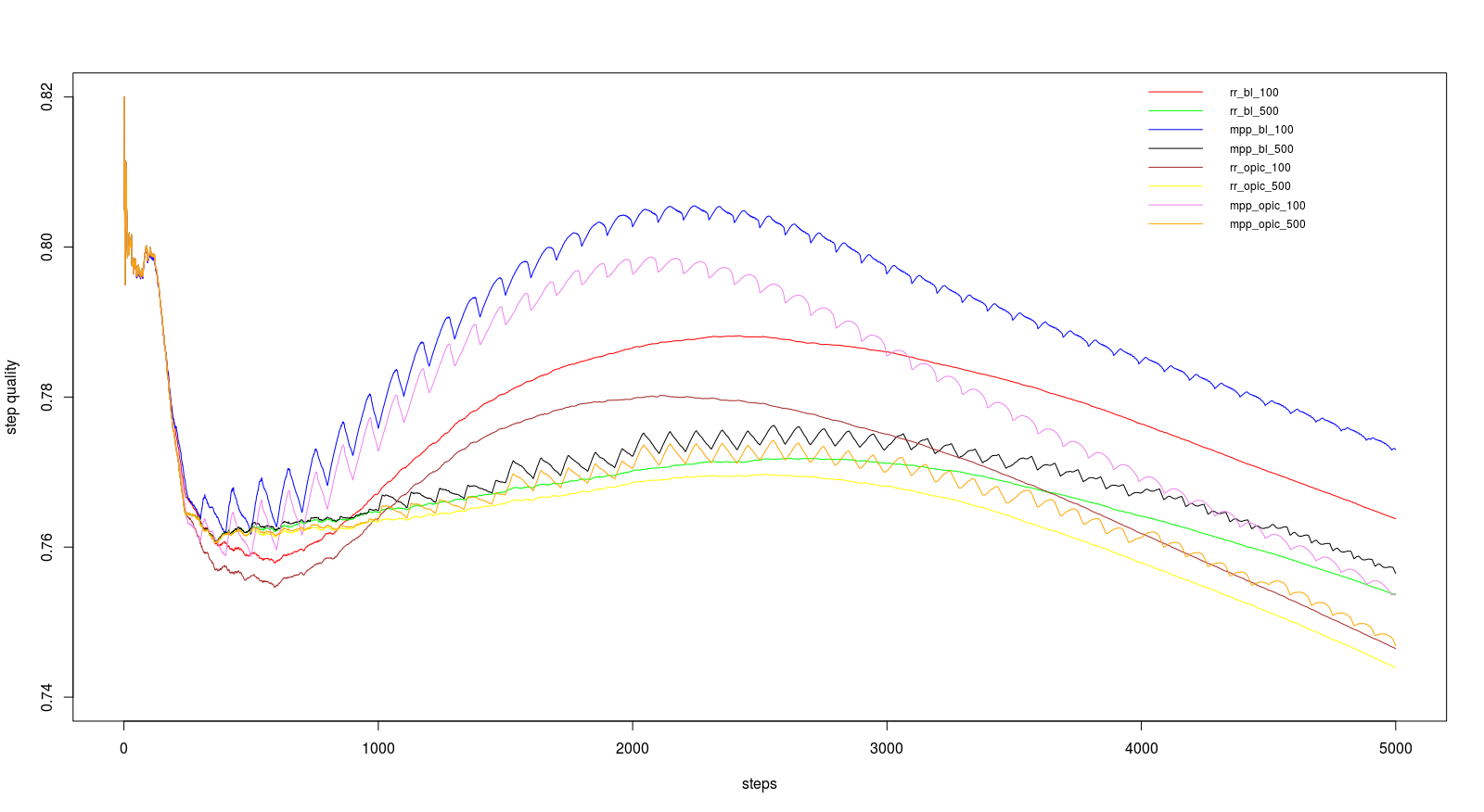

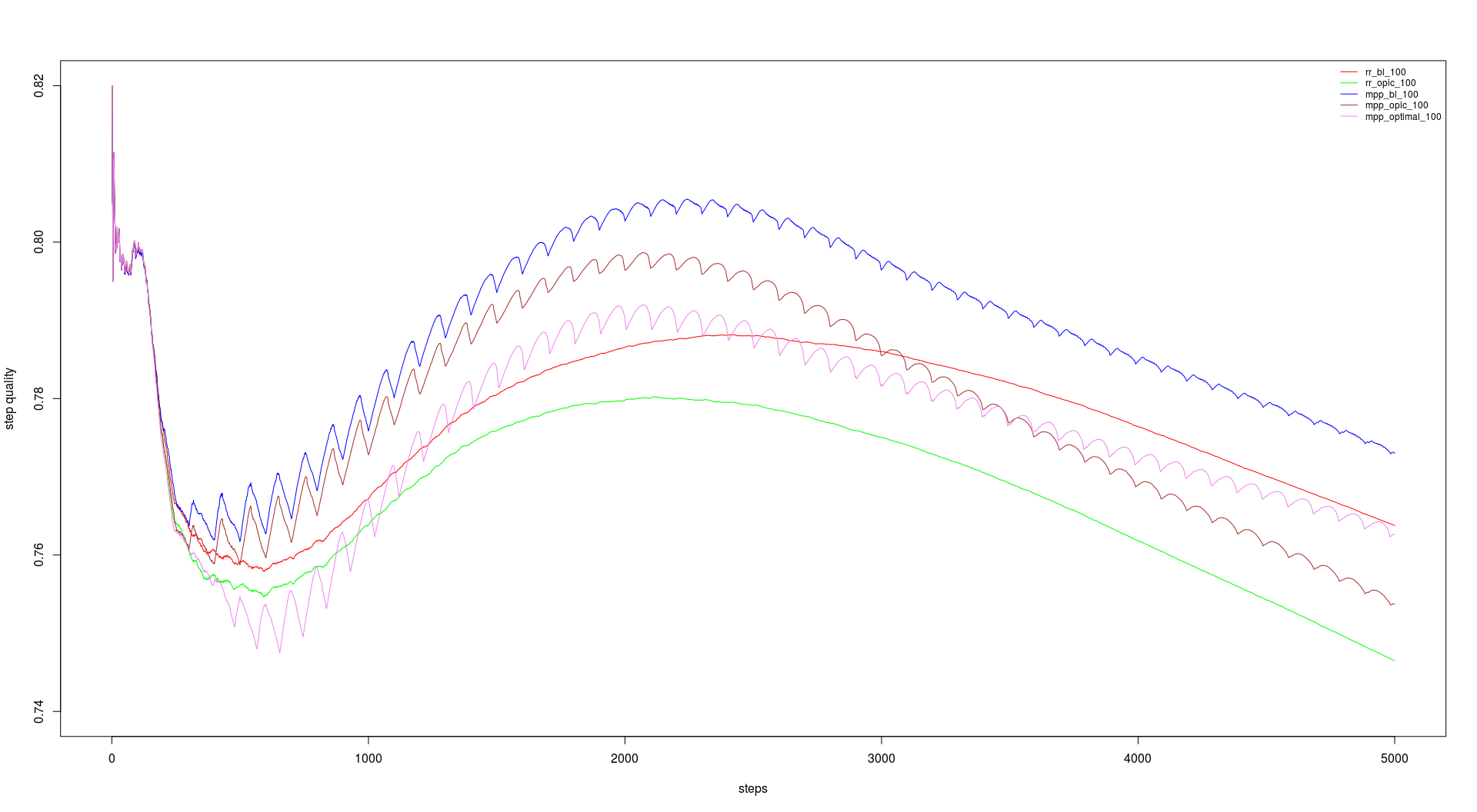

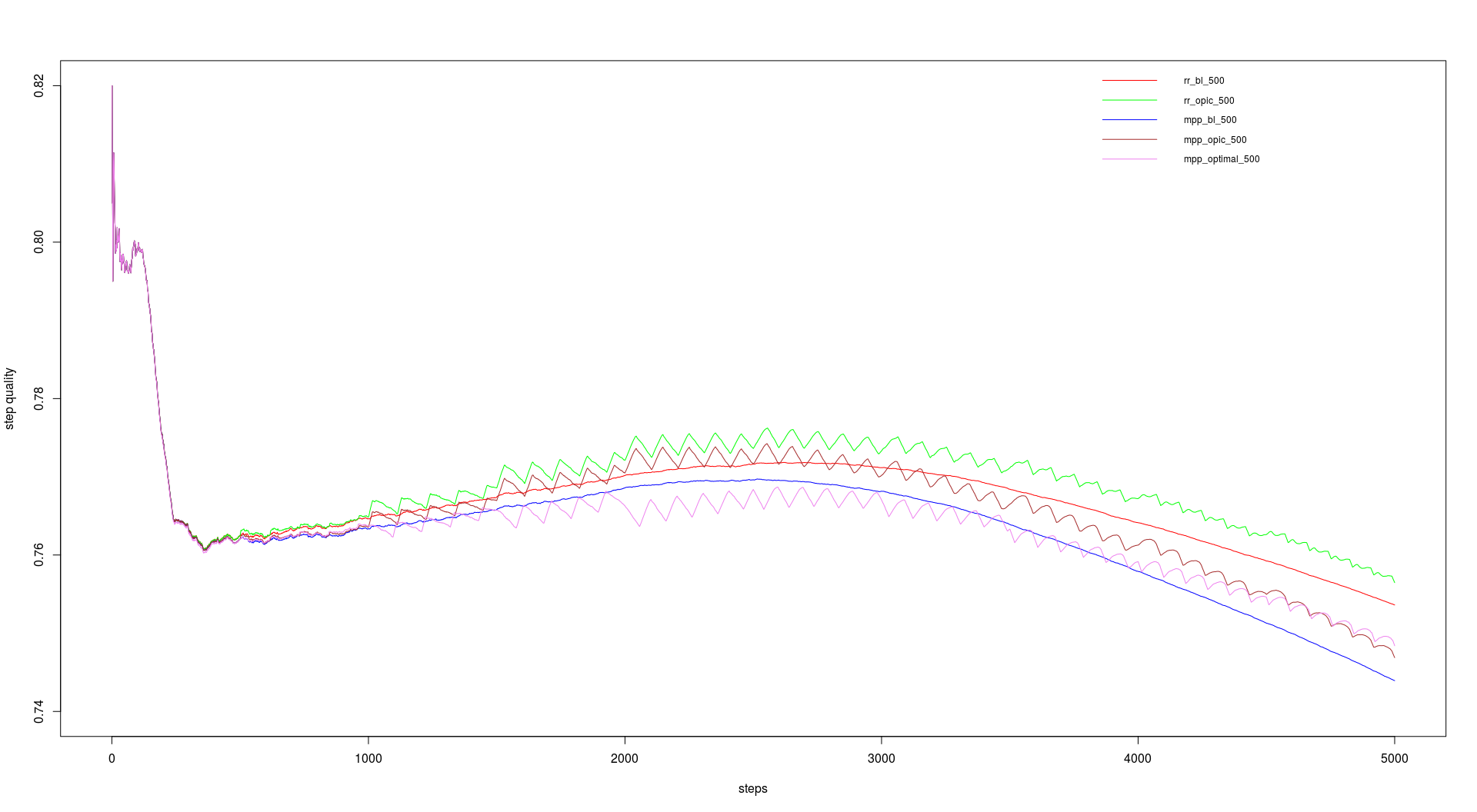

For the course we had a link graph with about 230 million entries (including duplicates) on which we had to run our tests: 5000 steps with 200 URLs per step and a batch size of 100 or 500. The batch size dictates the update intervals for backlink count and OPIC. The runtimes are in the table below and the performance in the graphs above.

| Site-level strategy | Page-level strategy | Batch size | Runtime (min:s) |
|---|---|---|---|
| breadth-first search | - | - | 1:11.241 |
| round robin | backlink count | 100 | 3:03.407 |
| round robin | backlink count | 500 | 2:59.153 |
| round robin | OPIC | 100 | 3:35.574 |
| round robin | OPIC | 500 | 3:06.375 |
| max page priority | OPIC | 100 | 3:30.296 |
| max page priority | OPIC | 500 | 3:03.940 |
| max page priority | backlink count | 100 | 3:14.608 |
| max page priority | backlink count | 500 | 2:17.481 |
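The test setup above amounts to 5000 × 200 = 1,000,000 crawled URLs per run. The post doesn't define the batch size precisely. Assume it means "recompute priorities after every `batch_size` crawled URLs". Then a batch size of 100 means 10,000 priority updates per run and a batch size of 500 means 2,000. That would fit the table, where the 500 run is faster in every pair. Below is a minimal Python sketch of that reading. `BatchedUpdates` is a hypothetical wrapper that buffers crawl results and applies them to a priority strategy once per batch.

```python
from typing import Callable, List, Tuple

STEPS = 5000
URLS_PER_STEP = 200


def priority_updates(batch_size: int, steps: int = STEPS,
                     urls_per_step: int = URLS_PER_STEP) -> int:
    """Recomputations needed if priorities are refreshed once per `batch_size` URLs."""
    return -(-(steps * urls_per_step) // batch_size)  # integer ceiling division


class BatchedUpdates:
    """Buffer crawl results and apply them to the priorities once per batch."""

    def __init__(self, apply: Callable[[str, List[str]], None], batch_size: int) -> None:
        self.apply = apply  # e.g. the on_crawl method of a priority strategy
        self.batch_size = batch_size
        self.pending: List[Tuple[str, List[str]]] = []
        self.flushes = 0

    def on_crawl(self, page: str, outlinks: List[str]) -> None:
        self.pending.append((page, outlinks))
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if not self.pending:
            return
        for page, outlinks in self.pending:
            self.apply(page, outlinks)
        self.pending.clear()
        self.flushes += 1


for size in (100, 500):
    print(f"batch size {size}: {priority_updates(size)} priority updates")
# batch size 100: 10000 priority updates
# batch size 500: 2000 priority updates
```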

For the last few weeks I worked with some people on a smart meter project. Our goal was to show how to receive and handle the data on a large scale. We divided the work into three parts: the first was a generator for lifelike data; the second, based on Apache Storm and Apache Accumulo, received the data and stored it; and the third generated reports with MapReduce.
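The first part, the data generator, can be illustrated with a small Python sketch. It is not the project's generator. Every modelling choice in it is an assumption: one reading every 15 minutes, a per-meter standby load, a daily cycle that peaks around 18:00, and Gaussian noise, all with made-up parameter ranges. A fixed seed per meter keeps the output reproducible, which helps when the ingestion side has to be tested against known data.

```python
import math
import random
from datetime import datetime, timedelta
from typing import Iterator, Tuple

Reading = Tuple[str, datetime, float]  # (meter id, timestamp, kWh in the interval)


def generate_readings(meter_id: str, start: datetime, days: int,
                      interval_minutes: int = 15, seed: int = 0) -> Iterator[Reading]:
    """Yield synthetic consumption readings with a daily pattern plus noise."""
    rng = random.Random(seed)
    base = rng.uniform(0.05, 0.15)       # standby load per interval (assumed range)
    amplitude = rng.uniform(0.05, 0.20)  # strength of the daily cycle (assumed range)
    for i in range(days * 24 * 60 // interval_minutes):
        ts = start + timedelta(minutes=i * interval_minutes)
        hour = ts.hour + ts.minute / 60
        # 1 + sin(...) peaks at 18:00 and bottoms out at 06:00
        daily = amplitude * (1 + math.sin(2 * math.pi * (hour - 12) / 24))
        noise = rng.gauss(0.0, 0.02)
        yield meter_id, ts, round(max(0.0, base + daily + noise), 4)
```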

The code can be found on GitHub: